Last Update: July 5, 2022

Evaluability Assessments answer the question: Is there enough available information to justify the time and cost of a full-scale evaluation? If they are done early enough during implementation, they can identify basic problems in design which can guide remedial action.

One old study set the stage for 8 recent detailed Guides which synthesize earlier work and provide useful advice on whether, when and how to undertake an evaluability assessment – or to use other approaches to assessing project or programme design integrity.

Level of difficulty: Moderate

Length: 3-72 pages

Primarily useful for: Evaluation managers, RBM specialists doing evaluation assessments

Most useful: DFID Guide to Planning Evaluability Assessments

Most interesting: Program Management and the Federal Evaluator (1974)

Limitations: These guides tell us what needs to be done, but they require people with the process skills to do it all.

The CSO Evaluability Assessment Checklist (guide 5 below) is a short, easy-to-read checklist of key questions to consider before deciding whether and how to do an evaluation in conflict situations. It takes the checklist produced in 2013 by Rick Davies for DFID and expands on some of its items in language intended to be easier for programme managers and others who are not evaluation specialists to understand, raising some practical questions to consider when assessing the costs and benefits of evaluations.

The History of Evaluability Assessments

Evaluability assessments, known in some cases 40 years ago as pre-assessments or exploratory evaluations, have been used for many years in public health research, where the term “evaluable” refers to “patients whose response to a treatment can be measured because enough information has been collected”, as well as in education and justice (PDF). The term also has academic antecedents in testing mathematical propositions, going far back into the 19th century.

The Foundation Evaluability Assessment Document

The foundation evaluability concepts for social programmes were initially presented, from what I can see, in a 1974 Urban Institute study, one of many emerging during implementation studies of the Johnson administration's Great Society Program. It was published as "Program Management and the Federal Evaluator" in the Public Administration Review, and included in a 1977 volume of Readings in Evaluation Research, available through Google Books.

[Image: The Foundation Evaluability Assessment article]

It advocated looking at issues which are at the heart of current guides to evaluability assessment - and for that matter, at the heart of solid results-based design:

- Whether there is a clearly defined problem addressed in design

- If the intervention is clearly defined

- If the short and longer term results are defined clearly enough to be measurable

- If “the logic of assumptions linking expenditure of resources, the implementation of a program intervention, the immediate outcome to be caused by that intervention, and the resulting impact” are “specified or understood clearly enough to permit testing them”.

- Whether managers are capable of, and motivated to use, performance data for concrete management decisions.
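As a rough illustration only, the five questions above can be treated as a simple yes/no checklist. The sketch below is not taken from the 1974 article: the `Criterion` class, the `unmet` helper and the answers for the imaginary project are all hypothetical, and the question wording is paraphrased from the list above.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One evaluability question and a yes/no answer for a given project."""
    question: str
    met: bool


def unmet(criteria):
    """Return the questions whose answer is 'no'."""
    return [c.question for c in criteria if not c.met]


# Hypothetical answers for an imaginary project (illustration only).
checklist = [
    Criterion("Problem clearly defined in the design", True),
    Criterion("Intervention clearly defined", True),
    Criterion("Short and longer term results measurable", False),
    Criterion("Logic linking resources, intervention, outcome and impact testable", False),
    Criterion("Managers able and motivated to use performance data", True),
]

gaps = unmet(checklist)
print(f"{len(gaps)} of {len(checklist)} questions need attention:")
for question in gaps:
    print(" -", question)
```

A list like this only records the answers; reaching them still depends on the kind of stakeholder discussion the guides describe.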

While there are many other guides to evaluability assessment which are much more detailed and of more practical utility today, the most important core concepts are here in this 1974 study, and this short, 43-year-old article is still worth reading for its insights on clarity of language, assumptions and results.

Leonard Rutman’s later 1980 book Planning Useful Evaluations - Evaluability Assessment set out a detailed approach to dealing with all of these issues.

Changes in Evaluability Assessment Utilization

Evaluability Assessments started out as ways of improving the design of evaluations, to increase the chances they will be useful to the people who are funding and implementing activities. In recent years, with a substantial increase in the number of evaluations of international development projects, many agencies, including UNICEF, the World Bank, UNDP and numerous bilateral donors, have incorporated evaluability assessments into the project cycle: in theory during the design process and, after design, during implementation, before a decision is made to pay for a full-scale evaluation.

Many Evaluability Assessment Guides have been produced in recent years, but here are just a few worth noting.

1. The DFID Guide to Planning Evaluability Assessments, by Rick Davies

The DFID Guide to Planning Evaluability Assessments (PDF) (2013, 56 pages) was produced by Rick Davies, who has a host of useful evaluation documents and websites to his credit. It does a detailed job of synthesizing the literature to provide suggestions on when and how to proceed with an assessment, and on what the possible consequences of doing this could be for project or programme design changes.

"In an ideal world projects would be well designed. One aspect of their good design would be their evaluability. Evaluability Assessments would not be needed, other than as an aspect of a quality assurance process closely associated with project approval (e.g. as used by IADB). In reality there are many reasons why approved project designs are incomplete and flawed, including:

- Political needs may drive the advocacy of particular projects and override technical concerns about coherence and quality.

- Project design processes can take much longer than expected, and then come under pressure to be completed.

- In projects with multiple partners and decentralised decision making a de facto blueprint planning process may not be appropriate. Project objectives and strategies may have to be “discovered” through on-going discussions.

- Expectations about how projects should be evaluated are expanding, along with the knowledge required to address those expectations.

.... In these contexts Evaluability Assessments are always likely to be needed in a post-project design period, and will be needed to inform good evaluation planning...

…Many problems of evaluability have their origins in weak project design. Some of these can be addressed by engagement of evaluators at the design stage, through evaluability checks or otherwise. However project design problems are also likely to emerge during implementation, for multiple reasons. An Evaluability Assessment during implementation should include attention to project design and it should be recognised that this may lead to a necessary re-working of the intervention logic." [p. 9]

The DFID guide goes through every step of planning and executing an evaluability assessment:

- When evaluability assessments are appropriate

- Who should conduct the assessment – the types of expertise required for evaluability assessments

- How to contract evaluability assessments

- How long the assessments could take

- How much it will cost to do an evaluability assessment

- Different processes required for an evaluability assessment

- Detailed lists of questions to be dealt with when assessing project design, assessing availability of indicator data, and stakeholder participation in the evaluability assessment

- The types of reports which should come out of an evaluability assessment

- The risks of undertaking evaluability assessments.

[Image: Project Design Checklist]

This report also includes annexes summarizing the different models of stages that researchers and agencies have suggested for conducting an evaluability assessment, and even a draft Terms of Reference for an evaluability assessment – something which seems to have been used almost verbatim subsequently by many aid agencies.

2. The UN Office on Drugs and Crime Evaluability Assessment Template

[Image: Evaluability Assessment Template - UN Office on Drugs and Crime]

Increasingly, these assessments are now being used early in the implementation period, as an evaluability assessment template produced by the United Nations Office on Drugs and Crime puts it, as a means of cross-checking the validity of the original design, with the possible purpose of enabling mid-course design changes.

The overall purpose of an evaluability assessment is to decide whether an evaluation is worthwhile in terms of its likely benefits, consequences and costs. Also, the purpose is to decide whether a programme needs to be modified, whether it should go ahead or be stopped.

Timing: The evaluability assessment is appropriate early in the programme cycle - when the programme is being designed but has not yet become operational. A second opinion on a programme and the strength of its design and logic is only worthwhile at this early stage - when something can be done to remedy any weaknesses.

This brief 3-page checklist may prove a bit too constrictive in its decision-making path to be applied literally in all evaluability assessments in other fields, but the questions it asks about the credibility of the design, data collection systems, and the utility of an evaluation for management are worth reviewing, as is the advice to use theory of change workshops to test logic models and programme theories.

They are, in this context, quality control activities, in effect very early mid-term reassessments of the Results-Based Management process.

3. Evaluability Assessment for Impact Evaluation

This guide (24 pages) was produced by Greet Peersman, Irene Guijt and Tiina Pasanen for the Overseas Development Institute.

This guide provides “guidance, checklists and decision support” in a simple, easy-to-read format, expanding on the checklists provided by Rick Davies in the 2013 DFID guide. It is aimed primarily at those who want to use the assessment not for project or programme design, but before deciding whether to undertake a full-scale impact evaluation. It provides a series of checklists of questions on designing and conducting an assessment, with some practical examples of the resources required for an evaluability assessment.

[Image: Level of Effort required for an Evaluability Assessment]

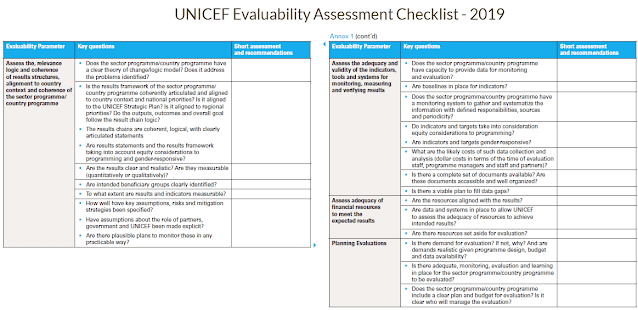

4. The UNICEF Guidance Note for Conducting Evaluability Assessments

(2019 - 13 pages)

The UNICEF 2018 revised evaluation policy notes that one of the most difficult challenges in improving the evidence-based culture in the organization is the "risk of insufficient evaluation experts, especially at the country level" (p. 20), and cites the relevance of evaluability in identifying this risk and in laying the groundwork for country-based evaluation capacity development.

This short but practical UNICEF evaluability assessment guide summarizes some of the main points made by Rick Davies in his detailed 2013 review, and other sources, and puts the rationale and procedures for evaluability assessments firmly in the UNICEF country programming cycle, at those points where practical decisions on design and operations of projects and programmes are still possible.

This guide summarizes the steps to be taken at three points: during project design, as part of the quality assurance process; during the very early stages of the 5-year country programme cycle, to learn how the design's original intervention assumptions and results framework have been operationalized during actual implementation; and near the end of a project, when the feasibility and design of a country programme evaluation are being considered.

Guides for Assessing Evaluability in Conflict Situations

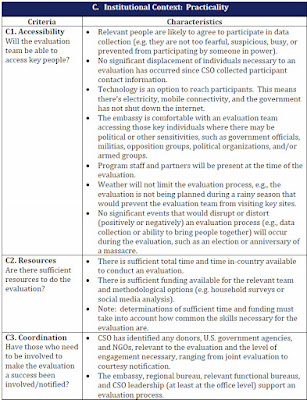

Four guides deal with a very practical set of questions about whether to undertake evaluations in conflict situations.

5. The CSO Evaluability Assessment Checklist: Working Draft

(2017 – 13 p.)

This guide was produced by Cheyanne Scharbatke-Church for the U.S. State Department’s Bureau of Conflict and Stabilization Operations. While it does not appear to be available on their website, it is available on the American Evaluation Association’s website, which has a large number of other very useful resources.

[Image: Practical questions for assessing evaluability in conflict situations]

In a similar vein, the CDA Collaborative has a number of interesting guides on whether to use evaluability assessments in peacebuilding and conflict situations, or alternatively, to use what is called programme quality assessment. Many programmes which are not focused specifically on peacebuilding or conflict resolution could benefit from considering the alternative approaches:

6. Evaluability Assessments in Peacebuilding Programming

(2012 – 21 p.)

This guide, written by Cordula Reimann, lists 63 criteria for assessing whether evaluability in peacebuilding situations is high, medium or low.
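To make the idea of a high, medium or low rating concrete, here is a minimal sketch that turns a count of criteria met into a rating. It does not reproduce the guide's own scoring method, which is not described here: the function name `rate_evaluability` and both cut-off values are hypothetical, chosen only for illustration.

```python
def rate_evaluability(criteria_met, criteria_total, high_cutoff=0.75, low_cutoff=0.40):
    """Rate evaluability from the share of criteria met.

    The cut-offs are invented for this example; they are not taken
    from the guide and would need to be agreed with stakeholders.
    """
    if criteria_total <= 0:
        raise ValueError("criteria_total must be positive")
    if not 0 <= criteria_met <= criteria_total:
        raise ValueError("criteria_met must be between 0 and criteria_total")

    share = criteria_met / criteria_total
    if share >= high_cutoff:
        return "high"
    if share < low_cutoff:
        return "low"
    return "medium"


# Three imaginary programmes assessed against 63 criteria.
for met in (50, 30, 10):
    print(met, "of 63 criteria met:", rate_evaluability(met, 63))
```

A simple share of criteria met treats every criterion as equally important, which a real assessment may well not want to do.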

[Image: Assessing evaluability of programme design]

7. An Alternative to Formal Evaluation of Peacekeeping

(2012 – 34 p)

Cordula Reimann, Diana Chigas & Peter Woodrow compare the utility of, and resources required for, three approaches (formal evaluation, Program Quality Assessment, and internal Reflection Exercises) for organizations in different situations and with different needs. People working in areas not related to conflict could also find the Program Quality Assessment model a useful alternative to a full evaluability assessment.

[Image: Comparing Reflection, Program Quality Assessment and Evaluation]

8. Thinking Evaluatively In Peacebuilding Design, Implementation And Monitoring

(2016 – 72 p.)

This guide brings both of these together in a longer, more detailed assessment of how the utility of the different approaches can be assessed in choosing the right evaluative option for organizations in different situations.

[Image: Criteria for choosing evaluation options]

Conclusion: Using Results Based Management in Evaluability Assessments

Evaluability Assessments apply basic concepts of Results-Based Management to determining, as the original 1974 study proposed, if the original project or programme design:

- Addresses a clear problem,

- Has an intervention strategy which is logical, and shared by stakeholders,

- Has access to information for results indicators which will describe or measure progress against the original problem, and which are likely to be useful to funding and decision-making authorities.

Some evaluability assessments have been limited to document review, but in my experience these can be sterile, almost academic undertakings, not likely to provide solid information on stakeholder agreement to the logic of the intervention.

But if field work is recognized as a necessary component of the evaluability assessment process, the basic components of Results-Based design will be essential in conducting or engaging in evaluability assessments.

Getting to the stage where we understand if there is enough coherence in a programme or project to evaluate it means using many of the same skills and processes of stakeholder consultation which are the key to solid Results-Based design:

- Identifying stakeholders

- Getting stakeholder agreement on actionable problems

- Identifying with stakeholders possibly multiple causes of a problem

- Agreeing on which causes will be the target of the intervention

- Clarifying assumptions about what interventions work and what is appropriate in the context of the intervention

- Getting stakeholder agreement on clear short, mid-term and long-term desired results

- Identifying internal and external risks

- Identifying practical indicators to verify progress on results, and collecting baseline data on these

- Getting agreement on data collection and data reporting responsibility and format

- Getting stakeholder agreement on what inputs, and activities will be necessary to achieve results

- Putting this together into a coherent theory of change with all of the stakeholders

If we can successfully engage with stakeholders in these processes, we have a reasonable chance of conducting a useful evaluability assessment.

The bottom line: The earliest of these studies outlined the basics, and recent guides provide the details on how to do it, but we still need the people with process skills to make evaluability assessments more than just a buzzword.

_____________________________________________________________

GREG ARMSTRONG

Greg Armstrong is a Results-Based Management specialist who focuses on the use of clear language in RBM training, and in the creation of usable planning, monitoring and reporting frameworks. For links to more Results-Based Management Handbooks and Guides, go to the RBM Training website.