by Greg Armstrong

[Updated October 2018]

International aid agencies sometimes try to use standard performance indicators to make aggregation of agency-level results easier, but there are real dangers in using these at the project level, as Sara Holzapfel explains in her detailed report The Role of Indicators in Development Cooperation.

Level of Complexity: Complex

Length: 236 pages (the full version, 38 pages for an earlier discussion paper)

Languages: English

Primarily Useful for: Agency-level RBM experts

Most Useful: Comparisons of how aid agencies use standard indicators at different results levels - particularly Appendix A5, pp. 205-233.

Limitations: Realistically, there are no quick answers on how to aggregate project-level results without using standard indicators

For readers interested in the kinds of indicators different agencies use for different types of results, this report has a series of very useful annexes, discussed in more detail below.

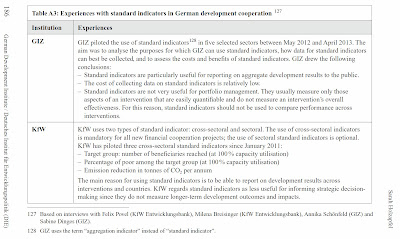


Background

Many of us have encountered development projects where, in an attempt to demonstrate agency-wide accountability for results, the aid agency designing the project has moved away from project-specific results indicators that measure capacity development, policy change or changes in implementation, and has instead tried to use agency-level standard indicators at the project level.

As Sara Holzapfel notes in her detailed assessment of how aid agencies use standard performance indicators, published by the German Development Institute, this trend has arisen both from a concern with accountability and from a real interest in whether development programmes contribute to agreed-upon goals:

“Amid rising criticism of aid effectiveness coupled with tight budgets in many traditional donor countries at a time of economic crisis, donor agencies are under pressure to deliver more value for money and to provide evidence of the positive effects of development cooperation. In response to these pressures, more and more development agencies are adopting agency results frameworks for monitoring and managing their progress in pursuing their strategic objectives and for reporting on performance.”

Even where there is apparent, substantial progress on broad, long-term indicators, such as those for the Millennium Development Goals, questions still remain about whether the indicators for these goals can themselves tell us anything about whether development interventions contribute effectively to long-term results, and whether the indicators describe progress against problems effectively. As a 2014 report on MDG targets and indicators for human development and human rights, published in the Journal of Human Development and Capabilities, noted:

“The unintended consequences revealed in the Project cannot merely be ascribed to the goals and targets having been selected or implemented badly, as is sometimes claimed. They are more fundamental structural issues arising from the nature of quantification and the nested structure of goals, targets and indicators that the MDGs created.”

Standard and custom development indicators

In her 2014 paper, Sara Holzapfel describes the difference between standard (or common) and what she refers to as “custom indicators” this way:

“In this study, an indicator is said to be a custom indicator if it is tailored to describe a specific phenomenon or to estimate a distinct change in unique circumstances. Standard indicators, by contrast, are indicators that have a common definition, method of measurement and interpretation. They produce data that can be aggregated and compared across interventions, countries or regions. Due to their characteristics, custom indicators may be said to be particularly useful for monitoring the performance of individual interventions, whereas standard indicators serve primarily to report on results at an aggregate level and to inform decision-making.” [p. 3]

Standard indicators in strategic planning

The study also usefully puts the whole issue of the utility of standard indicators in the context of where they are used, including a discussion of the use of standard indicators for strategic planning:

- Using indicators to assess countries’ relative needs for assistance,

- Using indicators to assess relative country performance towards development results, and

- Using indicators to formulate aid agency strategies and plans, determining where to put aid money by country, region and sector.

Standard indicators for long-term results might be relevant for assessing individual agency performance at the country level or, as this study suggests, for helping aid agencies and national governments decide where resources should go. Holzapfel’s study also suggests that standard indicators are useful for aid agencies at the lowest level of the results chain, to measure inputs and the completion of activities, things often referred to as Outputs. These would include the number of schools built or the number of teachers or health workers trained.

Comparisons of Donor Agency Indicators


- Table A4, pages 187-204, provides examples of indicators for country-level results in poverty reduction, health, education, governance, infrastructure, agriculture and food security, finance, regional integration and conflict, used by the World Bank, the Asian Development Bank, the African Development Bank, the Inter-American Development Bank, DfID and UNDP.

- Table A5, pages 205-233, provides examples of Output and Outcome indicators for energy and climate change, transport, water and sanitation, agriculture, irrigation and food security, education, health, finance, humanitarian assistance, land and property rights, private sector development and employment, and institutional development and governance, drawn again from the World Bank, ADB, AfDB, IDB and DfID, but also from the Millennium Challenge Corporation and AusAID.


One curious omission is that German aid agencies are not included in this survey, although the study was published by the German Development Institute. This is unfortunate because GIZ, for one, plays an important international role in support of governance reform and other topics, while other agencies, such as GAC (Global Affairs Canada), appear to be less visible in geographic areas such as Southeast Asia. A footnote explains that this is because, as of 2014:

“The experiences of German development cooperation agencies will not be discussed here since their initiatives undertaken to aggregate results at the agency-level are still in a piloting phase. For example, the Kreditanstalt für Wiederaufbau (KfW) and the Deutsche Gesellschaft für Internationale Zusammenarbeit (GIZ) have only recently started piloting standard indicators for agency-level performance measurement...”

Nevertheless, a table on page 186 summarizes the German aid agencies' experience with standard indicators up to that date.

Standard indicators for short-term completed activities

While there are some useful indicators here, the report points out one potential problem: because aid agencies know it will be difficult to aggregate longer-term capacity development results, they could focus too much on what is countable, the short-term delivery of products or completed activities (outputs, as some agencies call them), rather than on real changes or results.

Indicators in real life

In my experience reviewing agency-wide and country programme indicators, the closer we get to examining project-level results (not just completed activities, but real changes in the middle of the results chain), the less likely it is that standard indicators will help us understand progress on the necessary foundation results: capacity development, and changes in attitude, policy and implementation behaviour.

There are cases where using indicators for the Millennium Development Goals, or the subsequent Sustainable Development Goals, might be possible at a meaningful project level (the prevalence of underweight children under five years of age in a limited geographic area, for example). But most of the time, the indicators that work at the national or international level over a 10- or 15-year period, such as the share of women in wage employment in the non-agricultural sector or carbon dioxide emissions per capita, will be too broad to help define achievable local results, or to adequately describe results for individual projects working in a limited geographic area over a short period of time (5 to 10 years, for example).

There are too many intervening steps in a genuinely useful logic model or theory of change, between the problems defined and the initiatives taken at the local level, for broad standard indicators to be useful guides to action or evaluation when they are applied to different conditions and different projects.

In many of these projects, the focus is on improving the capacity of change agents (teachers, agricultural agents, health workers or police, for example) to work with the population whose lives will, we hope, change for the better over time. What we need are locally relevant indicators on these topics.

Losing ownership of indicators and data collection

If we listen to most aid agencies’ espoused theory of how indicators are developed, we are supposed to be developing performance indicators with the partner agencies, change agents, and those affected by the projects, not imposing indicators – or results for that matter – from the top down.

But even where the most knowledgeable and well-meaning experts come to a consensus about what is needed to measure results in the very long term, they will not be familiar enough with the conditions, problems, needs or projects on the ground in the hundreds or thousands of places where real development work takes place. And where indicators are imposed on people, they often show little enthusiasm for data collection.

Top-Down vs Bottom-Up indicators

It comes down to this: indicators that can accurately measure progress towards results at the individual project level most probably will not be identical to programme-wide, agency-wide, or worldwide international indicators. By forcing such indicators upon people working at a country, programme or project level, we risk making the indicators meaningless for real results where people actually work: in projects, in schools, in individual government agencies, or in villages.

This top-down approach to indicators ultimately undermines the credibility of results-based management for the most important people in the system: the people who have to make it work in real life, not in an international conference room.

The alternative is to accept that broad indicators will be useful only in the very long term, and that useful logic models and theories of change developed at the field level will produce indicators that are relevant to the people who need to use them, and are therefore more likely to see actual data collection. Such indicators will also be more likely to be used in the design and management of development activities.

If we want broad country programme or agency-wide indicators on results, we should be starting the analysis with what the development professionals on the ground tell us will work, and be useful to them.

The bottom line:

Sara Holzapfel’s analysis of standard and custom indicators is a useful reminder of the complexity of trying to apply standard international indicators across projects and a valuable compilation of indicators from different agencies.

_____________________________________________________________

GREG ARMSTRONG

Greg Armstrong is a Results-Based Management specialist who focuses on the use of clear language in RBM training, and in the creation of usable planning, monitoring and reporting frameworks. For links to more Results-Based Management Handbooks and Guides, go to the RBM Training website.
